The ABC of Computational Text Analysis

#6 Learning Regular Expressions

Alex Flückiger

Faculty of Humanities and Social Sciences

University of Lucerne

7 April 2022

Recap last Lecture

- well-solved assignment #1 🎊

- counting words 🔢

- particular words or entire vocabulary

- preprocessing and cleaning 🧼

Outline

- introducing regular expressions ✨

- practicing the writing of patterns 🎢

Text as Pattern

Formal Search Patterns

How to extract all email addresses in a text collection?

Please contact us via info@organization.org.

---

For specific questions ask Mrs. Green (a.green@mail.com).

---

Reach out to support@me.ch

👉 Solution: Write a single pattern to match any valid email address

[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,} # match any email address (when used case-insensitively)

What are patterns for?

- finding 🔎

- extracting 🛠️

- removing/cleaning 🗑️

- replacing 🔁

… specific parts in texts

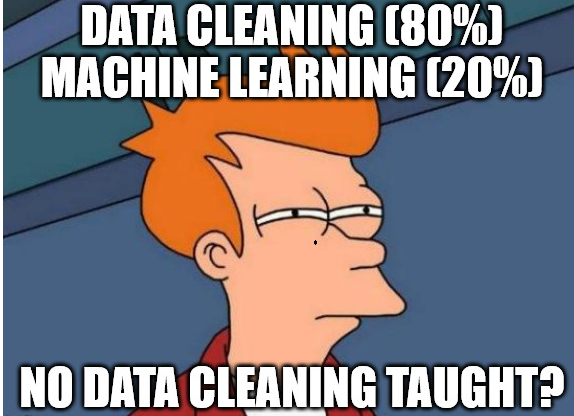

Data Cleaning is paramount!

What are Regular Expressions (RegEx)?

RegEx builds on two classes of symbols

- literal characters and strings

- letters, digits, words, phrases, dates etc.

- meta expressions with special meaning

- e.g., \w represents alphanumeric characters

- e.g., [Cc]o+l → Col, col, Cool, coool …

- akin to regular languages
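To make the two classes of symbols concrete, here is a minimal sketch that runs the pattern [Cc]o+l against a made-up word list. The words are an assumption for illustration only, and `grep -E` is used as the equivalent of `egrep`.

```shell
# Hypothetical word list, one word per line (not from the course data).
words=$(printf '%s\n' Col col Cool coool Cl cold)

# [Cc] is a character set, o+ means "one or more o", l is a literal.
# -x keeps only lines that match the pattern as a whole.
matches=$(printf '%s\n' "$words" | grep -Ex '[Cc]o+l')

printf '%s\n' "$matches"
```

"Cl" is dropped because o+ requires at least one "o", and "cold" is dropped because -x demands that the whole line matches.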

Finding + Extracting

extended globally search for regular expression and print (egrep)

- tool to filter/keep matching lines only

# check a regular expression quickly

echo "check this pattern" | egrep "pattern"

egrep "yes" file.txt # search in a specific file

egrep -r "yes" folder # search recursively within folder

egrep "yes" *.txt # keep lines containing pattern (yes) across txt-files

egrep -i "yes" *.txt # ditto, ignore casing (Yes, yes, YES ...)

egrep -v "noisy" *.txt # do NOT keep lines containing noisy

# extract raw match only to allow for subsequent counting

egrep -o "only" *.txt # print match only instead of entire line

egrep -h "only" *.txt # suppress file name

Quantifiers

repeat preceding character X times

- ?: zero or one

- +: one or more

- *: zero or any number

- {n}, {m,n}: a specified number of times

egrep -r "Bundesrath?es" # match old and new spelling

egrep -r "a+" # match one or more "a"

egrep -r "e{2}" # match sequence of two "e"

⚠️ Do not confuse regex with Bash wildcards!
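The quantifiers can be tried out on a few made-up lines. This is a sketch under the assumption of GNU grep, with `grep -E` standing in for `egrep`; the sample words are hypothetical. The last command also illustrates why a regex `*` is not a Bash wildcard.

```shell
# Hypothetical sample lines (made up for illustration).
sample=$(printf '%s\n' 'Bundesrathes' 'Bundesrates' 'See' 'Tal')

# h? makes the "h" optional, so both spellings match.
optional=$(printf '%s\n' "$sample" | grep -E 'Bundesrath?es')

# e{2} requires a sequence of two "e".
double_e=$(printf '%s\n' "$sample" | grep -E 'e{2}')

# In a regex, * repeats the preceding character (here: zero or more "a"),
# whereas the Bash wildcard * stands for any sequence of characters.
# "Ta*l" matches "Tal" and would also match "Tl" or "Taaal".
star=$(printf '%s\n' "$sample" | grep -Ex 'Ta*l')

printf 'optional h:\n%s\n' "$optional"
printf 'double e:\n%s\n' "$double_e"
printf 'star:\n%s\n' "$star"
```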

Character Sets

- [...] any of the characters between brackets

- any vowel: [auoei]

- any digit: [0-9]

- any letter: [A-Za-z]

- [^...] any character but none of these (negation)

- anything but the vowels: [^auoei]
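A short sketch of a plain and a negated character set, applied to a made-up line (the text is an assumption, and `grep -E` replaces `egrep`):

```shell
# Hypothetical sample line (made up for illustration).
line='Route 66 in 1985'

# [0-9]+ extracts runs of digits; -o prints each match on its own line.
digits=$(printf '%s\n' "$line" | grep -Eo '[0-9]+')

# [^0-9 ]+ is a negated set: runs of anything that is neither a digit nor a space.
non_digits=$(printf '%s\n' "$line" | grep -Eo '[^0-9 ]+')

printf '%s\n' "$digits" "$non_digits"
```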

# match the capitalized and non-capitalized form

egrep -r "[Gg]rüne"

# match sequences of 3 vowels

egrep -r "[aeiou]{3}" # quote the pattern so the shell does not expand it

# extract all bigrams (sequence of two words)

egrep -rohi "[a-z]+ [a-z]+"

Special Symbols

- . matches any character (excl. newline)

- \ escapes to match literal

- \. means the literal . instead of “any symbol”

- \w matches any alpha-numeric character

- same as [A-Za-z0-9_]

- \s matches any whitespace (space, newline, tab)

- same as [ \t\n]

# match anything between brackets

egrep -r "\(.*\)"

The power of .* …

matches any character any number of times
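The difference between . and \. as well as the reach of .* can be checked with a few made-up lines. This is only a sketch; the sample text is hypothetical and `grep -E` is used in place of `egrep`.

```shell
# Hypothetical sample lines (made up for illustration).
sample=$(printf '%s\n' 'a.b' 'axb' 'see (note 1) and (note 2)')

# An unescaped . matches any character, so both "a.b" and "axb" match.
any_char=$(printf '%s\n' "$sample" | grep -Ex 'a.b')

# \. matches only the literal dot.
literal_dot=$(printf '%s\n' "$sample" | grep -Ex 'a\.b')

# \(.*\) spans from the first "(" to the last ")" on the line.
in_parens=$(printf '%s\n' "$sample" | grep -Eo '\(.*\)')

printf '%s\n' "$any_char" "$literal_dot" "$in_parens"
```

Note that the last pattern returns one long match rather than two separate parentheses, because .* takes as much as it can.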

More Complex Examples

# extract basename of URLs

egrep -ro "www\.\w+\.[a-z]{2,}"

# extract valid email addresses (case-insensitive)

egrep -iro "[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,}" **/*.txt
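As a quick check, the email pattern can be run against the three example sentences from the start of the lecture. The sketch feeds them through a pipe instead of reading files and uses `grep -E` as the equivalent of `egrep`.

```shell
# The three example sentences from the beginning of this lecture.
text=$(printf '%s\n' \
  'Please contact us via info@organization.org.' \
  'For specific questions ask Mrs. Green (a.green@mail.com).' \
  'Reach out to support@me.ch')

# -i ignores case, -o prints only the matched address.
emails=$(printf '%s\n' "$text" | grep -Eio '[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,}')

printf '%s\n' "$emails"
```

The trailing full stop and the surrounding parentheses are left out, since the pattern requires at least two letters after the last dot and does not allow parentheses.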

Combining RegEx with Frequency Analysis

something actually useful

# count political areas by looking up words ending with "politik"

egrep -rioh "\w*politik" **/*.txt | sort | uniq -c | sort -h

# count ideologies/concepts by looking up words ending with "ismus"

egrep -rioh "\w*ismus" **/*.txt | sort | uniq -c | sort -h

Start simple, add complexity subsequently.
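The frequency pipeline can be followed step by step on a tiny made-up corpus. The sentences are hypothetical, `grep -E` stands in for `egrep`, and `sort -n` is used here as a plain numeric alternative to `sort -h`.

```shell
# Hypothetical mini corpus (made up for illustration).
corpus=$(printf '%s\n' \
  'Die Klimapolitik und die Sozialpolitik sind zentral.' \
  'Unsere Klimapolitik setzt auf erneuerbare Energien.' \
  'Auch die Finanzpolitik bleibt wichtig, ebenso die Klimapolitik.')

# 1. extract every word ending in "politik" (one match per line)
# 2. sort so that identical matches are adjacent
# 3. uniq -c collapses duplicates and prefixes the count
# 4. sort -n orders by count, most frequent last
counts=$(printf '%s\n' "$corpus" | grep -Eio '\w*politik' | sort | uniq -c | sort -n)

printf '%s\n' "$counts"

# The last line holds the most frequent term.
top=$(printf '%s\n' "$counts" | tail -n 1 | awk '{print $2}')
printf 'most frequent: %s\n' "$top"
```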

In-class: Exercise

- Use the command line to navigate to the local copy of the GitHub repository KED2022 and make sure it is up-to-date with git pull. Change into the directory materials/data/swiss_party_programmes/txt.

- Use egrep to extract all uppercased words like UNO, OECD, SP and count their frequency.

- Use egrep to extract all plural nouns with female endings, e.g. Schweizerinnen (starting with an uppercase letter, ending with innen, and any letter in between). Do the same for the male forms. Is there a qualitative or a quantitative difference between the gendered forms?

# Some not so random hints

piping with |

sort

uniq -c

egrep -roh **/*.txt

In-class: Solution

- Use egrep to extract all uppercased words like UNO, OECD, SP and count their frequency.

egrep -roh "[A-Z]{2,}" **/*.txt | sort | uniq -c | sort -h

- Use egrep to extract all plural nouns with female endings, e.g. Schweizerinnen (starting with an uppercase letter, ending with innen, and any letter in between). Do the same for the male forms. Is there a qualitative or a quantitative difference between the gendered forms?

egrep -roh "[A-Z][a-z]+innen\b" **/*.txt | sort | uniq -c | sort -h

egrep -roh "[A-Z][a-z]+er\b" **/*.txt | sort | uniq -c | sort -h

(A regular expression alone cannot extract only the male noun forms while excluding words like Wasser. For this, you have to use some kind of machine learning.)
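The limitation of the male-form pattern shows up on a single made-up sentence. The sentence is an assumption for illustration, and `grep -E` is used instead of `egrep`.

```shell
# Hypothetical sample sentence (made up for illustration).
line='Die Schweizerinnen und Schweizer trinken Wasser.'

# Female plural forms: capitalized word ending in "innen".
female=$(printf '%s\n' "$line" | grep -Eo '[A-Z][a-z]+innen\b')

# Capitalized words ending in "er": catches "Schweizer" but also "Wasser".
er_words=$(printf '%s\n' "$line" | grep -Eo '[A-Z][a-z]+er\b')

printf 'female: %s\n' "$female"
printf 'ending in er:\n%s\n' "$er_words"
```

The pattern only sees the spelling, so it cannot tell a person noun from any other noun ending in "er".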

Replacing + Removing

stream editor (sed)

- advanced find + replace using regex

sed "s/WHAT/WITH/g" file.txt

sed replaces any sequence, tr only single symbols
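A minimal side-by-side sketch of this difference, using a made-up input string:

```shell
# sed replaces a whole sequence ("world") with another string.
with_sed=$(echo "hello world" | sed "s/world/there/")

# tr maps single characters: every "l" becomes "L", every "o" becomes "0".
with_tr=$(echo "hello world" | tr "lo" "L0")

printf '%s\n' "$with_sed" "$with_tr"
```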

echo "hello" | sed "s/llo/y/g" # replace "llo" with a "y"

# by setting the g flag in "s/llo/y/g",

# sed replaces all occurrences, not only the first one

Contextual Replacing

reuse match with grouping

- define a group with parentheses (group_pattern)

- \1 equals the expression inside the first pair of parentheses

- \2 equals the expression inside the second pair

- …
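Groups can be reordered freely in the replacement. As a sketch with a made-up input, the following rewrites an ISO date as day.month.year by referring to three groups:

```shell
# Hypothetical ISO date (made up for illustration).
iso='2022-04-07'

# Group 1 = year, group 2 = month, group 3 = day; the replacement reverses them.
swiss=$(echo "$iso" | sed -E 's/([0-9]{4})-([0-9]{2})-([0-9]{2})/\3.\2.\1/')

printf '%s\n' "$swiss"
```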

# swap order of name (last first -> first last)

echo "Lastname Firstname" | sed -E "s/(.+) (.+)/\2 \1/"

# matching also supports grouping

# match any pair of two identical digits

egrep -r "([0-9])\1"

More Meta-Symbols

- \b matches word boundary

- word\b does not match words

- ^ matches begin of line and $ end of line

- ^A matches only A at line start

- | is a disjunction (OR)

- (Mr|Mrs|Mr\.|Mrs\.) Green matches alternatives
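These meta-symbols can be tested on a handful of made-up lines. The sketch assumes GNU grep (for \b) and uses `grep -E` in place of `egrep`; all sample lines are hypothetical.

```shell
# Hypothetical sample lines (made up for illustration).
sample=$(printf '%s\n' 'word' 'words' 'Mr Green' 'Mrs. Green' 'Dr Green' 'A line' 'Not A')

# word\b: "word" must end at a word boundary, so "words" is excluded.
boundary=$(printf '%s\n' "$sample" | grep -E 'word\b')

# ^A: only lines that start with "A".
line_start=$(printf '%s\n' "$sample" | grep -E '^A')

# (Mr|Mrs|Mr\.|Mrs\.) Green: any of the four alternatives, followed by " Green".
titles=$(printf '%s\n' "$sample" | grep -E '(Mr|Mrs|Mr\.|Mrs\.) Green')

printf '%s\n' "$boundary" "$line_start" "$titles"
```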

Greediness Trap

- greedy ~ match the longest string possible

- quantifiers * or + are greedy

- non-greedy by excluding some symbols

- [^EXCLUDE_SYMBOLS]* instead of .*

# greedy: an apple, other apple (-o prints only the match, not the whole line)

echo "an apple, other apple" | egrep -o "a.*apple"

# non-greedy: an apple

echo "an apple, other apple" | egrep -o "a[^,]*apple"

Assignment #2 ✍️

- get/submit via OLAT

- starting tomorrow

- deadline 15 April 2022, 23:59

- use forum on OLAT

- subscribe to get notifications

- ask friends for support, not solutions

In-class: Exercises I

- Use egrep to extract capitalized words and count them. What are the most frequent nouns?

- Use egrep to extract words following any of these strings: der die das. Hint: Use a disjunction.

- Do the self-check on the next slide.

- Use sed -E to remove the table of contents, the footer and the page number in the programme of the Green Party. Check the corresponding PDF to get a visual impression and test your regular expression with egrep first to see if you match the correct parts in the document.

In-class: Solution I

- Use egrep to extract capitalized words and count them. What are the most frequent nouns?

egrep -roh "[A-Z][a-z]+" **/*.txt | sort | uniq -c | sort -h

- Use egrep to extract words following any of these strings: der die das. Hint: Use a disjunction.

egrep -roh "(der|die|das) \w+" **/*.txt

- Use sed -E to remove the table of contents, the footer and the page number in the programme of the Green Party. Check the corresponding PDF to get a visual impression and test your regular expression with egrep first to see if you match the correct parts in the document.

cat gruene_programme_2019.txt | sed "1,192d" | sed -E "s/^Wahlplattform.*2023$//g" | sed -E "s/^[0-9]+$//g"

In-class: Self-Check

equivalent patterns

a+ == aa* # "a" once or more than once

a? == (a|_) # "a" once or nothing

a{3} == aaa # three "a"

a{2,3} == (aa|aaa) # two or three "a"

[ab] == (a|b) # "a" or "b"

[0-9] == (0|1|2|3|4|5|6|7|8|9) # any digit

In-class: Exercise II

- Count all the bigrams (sequence of two words) using character sets and quantifiers. What about trigrams (three words)?

- Extract the words following numbers (also consider numbers like: 1'000, 1,000 or 5%). Then, count all the words while excluding the numbers themselves. Hint: Pipe another grep to remove the digits.

- You are ready to come up with your own patterns…

In-class: Solution II

- Count all the bigrams (sequence of two words) using character sets and quantifiers. What about trigrams (three words)?

egrep -hoir "\b[a-z]+ [a-z]+\b" | sort | uniq -c | sort -h

egrep -hoir "\b[a-z]+ [a-z]+ [a-z]+\b" | sort | uniq -c | sort -h

- Extract the words following numbers (also consider numbers like: 1'000, 1,000 or 5%). Then, count all the words while excluding the numbers themselves. Hint: Pipe another grep to remove the digits.

# [0-9,'%]* (instead of +) also matches single-digit numbers such as 5

egrep -rhoi "[0-9][0-9,'%]* [a-z]+" | egrep -io "[a-z]+" | sort | uniq -c | sort -h

- Alternative:

egrep -rhoi "[0-9][0-9,'%]* [a-z]+" | sed -E "s/^[0-9][0-9,'%]* //" | sort | uniq -c | sort -h

In-class: Exercise III

Since you know about RegEx, we can use a more sophisticated tokenizer to split a text into words. What is the difference between the old and new approach? Test it and check the helper page with man.

# new, improved approach

cat text.txt | tr -sc "[a-zäöüA-ZÄÖÜ0-9-]" "\n"

# old approach

cat text.txt | tr " " "\n"

More Resources

required

- Ben Schmidt. 2019. Regular Expressions.

- Cheatsheet of this course

highly recommended

- Nikolaj Lindberg. egrep for Linguists.

online regular expression editor

- regex101 to write and check patterns